SCYLLA: QoE-aware Continuous Mobile Vision with FPGA-based Dynamic Deep Neural Network Reconfiguration

Aug 4, 2020

Shuang Jiang

Zhiyao Ma

Xiao Zeng

Chenren Xu

Mi Zhang

Chen Zhang

Yunxin Liu

Abstract

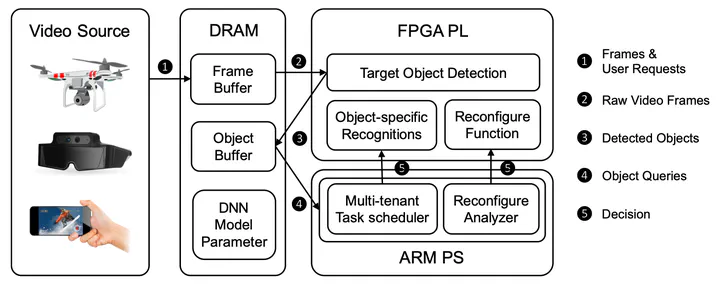

Continuous mobile vision is becoming increasingly important as it finds compelling applications that substantially improve our everyday lives. However, simultaneously meeting the requirements of quality of experience (QoE) diversity, energy efficiency, and multi-tenancy is a significant challenge. In this paper, we present SCYLLA, an FPGA-based framework that addresses this challenge by enabling QoE-aware continuous mobile vision through dynamic reconfiguration. SCYLLA pre-generates a pool of FPGA designs and DNN models, and dynamically applies the optimal software-hardware configuration to maximize the overall QoE of concurrent tasks. We implement SCYLLA on a state-of-the-art FPGA platform and evaluate it using a drone-based traffic surveillance application on three datasets. Our evaluation shows that SCYLLA provides much better design flexibility and achieves better QoE trade-offs than the status-quo CPU-based solution that existing continuous mobile vision applications are built upon.

Publication

In IEEE Conference on Computer Communications 2020